BBQvec: A scalable vector search library

Intro

One of the more popular techniques in AI today is vector search: creating embedding vectors from models and storing them; then, given a user query, finding the most related stored vectors – either in the set, such as similar products, or not, as in similar text. Getting the truly closest vectors, without incurring a full-table-scan, is the goal of all these approximate nearest-neighbor algorithms.

At Daxe, we’re building Structured Semantic Search – a complete AI search stack. Our team leverages our collective experience from OpenAI, Google, Lyft, AWS, Harvard, Berkeley, and Darden to create novel technologies for developers and organizations to harness the full potential of their data. We’re passionate about AI sovereignty and owning your data on-premise – we test everything on our own basement GPU cluster and deploy it up to the largest enterprise solutions.

Daxe and Structured Semantic Search do far more than vector search; however, a vector relatedness signal is something we definitely have in our toolbox. As this problem is well-understood, and there are many alternatives out there, today we’re open-sourcing our vector indexing library and explaining how it works and where it fits into the open source AI community.

Why

At Daxe we use a combination of languages, and on the backend that’s generally Go and Rust. As we’re building our own data store, that ruled out using external vector databases; we needed an indexing library. Many good ones exist, such as FAISS and Annoy (or Arroy), and USearch, and while many covered some requirements, nothing covered all of them.

The biggest need, however, was scale; we need to handle many billions of vectors, with low index build time. This also means memory-only solutions are out, and we only want to compare the vectors, some of which may have to live on disk, without seeking too frequently. This also suggests that quantization and compression techniques, to allow the vectors to not fill the disk, are similarly important. Obviously, this has tradeoffs; the top of the line (a la HNSW) algorithms will have higher recall, but are also harder to scale.

We needed something else. So, we implemented a new vector index library, for Gophers and Rustaceans alike, based on a new algorithm we designed. We call it BBQvec and it’s open-source, and Apache-licensed, on Github now

The BBQvec Algorithm

A 2D Voting Parable

Suppose you (a vector) have a house in a neighborhood, in a city with some really active politics. Every week there’s a city council election. And every week, after the election, the city gets redistricted, and the voting districts are randomly redrawn.

This is rather annoying*. You and your nearest neighbors all want new bike lanes on your street, and want to vote together, but can’t, because sometimes you’re voting for the same council members and sometimes not.

The city’s philosophy, though, is that, on average, your neighborhood will vote for the same council members, and get your representation. Suppose you are House A. House B is your neighbor, C is a few blocks away, but D is on the other side of town. The district numbers you get to vote in may go like this:

This means that your nearest neighbors are the ones that vote in the same (or very similar) districts every week. You didn’t move; the lines around you did, but because you are physically close together, the voting roll has you in the same district more often. (Week 2, you may have been ‘accidentally’ gerrymandered)

The Algorithm In Short

The Annoy algorithm was definitely the seed of inspiration for BBQvec; splitting the space somewhat-randomly, and doing it multiple times, is a similar theme. Instead of building a forest of B-Trees by splitting planes, however, the randomness here comes first.

Select, once, a number of random orthonormal basis sets to cover the vector space

For every vector and its numerical ID, keep track of the index of its largest component. This is its ‘voting district’, also the face of the unit hypercube the vector points at, under each change of basis. Store these sets as bitmaps.

Quantize the vector, and save it on disk, keyed by ID.

At query time, do the same – take the query vector and find the list of largest component indexes

Find the other vector IDs that most frequently appear in each of the sets (ie, order by “total weeks together”).

Quantize the query vector, and directly compare and rank the matches.

Basis, Bitmap & Quantization. BBQvec.

Parameters

Another need we had was being able to dynamically shift how expensive each vector subquery would be to the overall query evaluation. This is another benefit to BBQvec.

There are intentionally few parameters at index creation time:

Vector Dimensionality: This defines the space you’re working in, usually determined by your embedding source or model.

Quantization Strategy: One advantage of this algorithm (like some of the alternative libraries, but not all) is that it’s completely agnostic to how quantization happens. Once a vector has been marked in the index bitmaps, the storage and comparison can be any quantization technique, and makes experimentation on the topic easy. This choice depends on how much disk you wish to trade off against fidelity to the raw vectors.

Total Number of Basis Sets: This doesn’t have to be very large – tens of basis sets work well for billions of vectors – but more sets will be more accurate, but take up more space and take longer to check. (This is somewhat akin to the Annoy strategy of multiple forests)

Of these, the number of basis sets is the one that takes any tuning – however, in testing, the number of bases needed is generally logarithmic to the desired maximum number of vectors to store in the index.

At query time, the parameters are also simple to understand and allow for the control we need.

K: The number of nearest neighbors to return, this is AKNN after all 🙂

Maximum Number of Vectors to Consider (SearchK): After intersecting enough ‘district’ bitmaps, if there are few enough candidates, we can stop and scan through all of them – when we reach this maximum number we brute-force the last mile. For more accurate, but slower searches, this number can be raised or lowered, to do more or fewer potential disk seeks, as desired.

Spill: We can widen our search – instead of looking at the sets for our largest component only, we can union our top three or four components. (As if we got to “vote in our closest N districts”)

Number of Basis Sets: While we can construct multiple basis sets, since they’re independent and random, we can use fewer of them at query time. By default, it should be the maximum, but fewer can allow for a quicker query.

Benchmarks

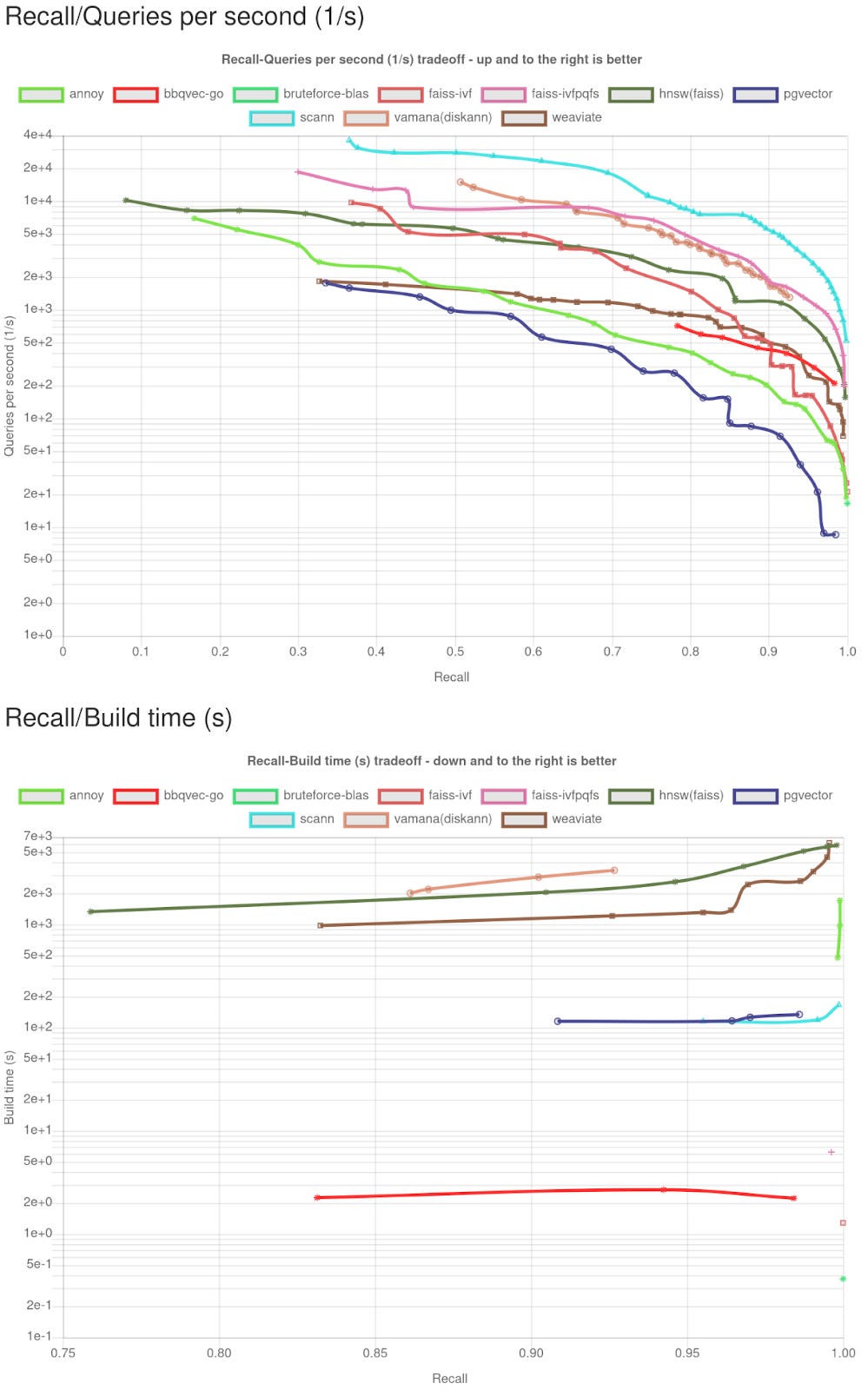

Fortunately, much work has been done in the space of benchmarking AKNN algorithms. Now that we’re releasing open source, we can properly add ourselves to https://ann-benchmarks.com, but we’re providing some early graphs to give a sense of the performance.

We know that BBQvec is not the fastest or most accurate algorithm in the flashiest top-line metric of Recall v QPS – simply decent, and in the middle of the pack. The biggest win, however, is in the time it takes to build the indexes: it’s orders of magnitude faster. This is an underrated superpower, especially when ingesting many billions of vectors at scale.

These graphs were produced from tests conducted on an EPYC 7402 with 24 cores and 128 GiB of memory. ANN-Benchmarks runs on an AWS r6i.16xlarge, Xeons with 64 cores and 512GiB of memory; arguably comparable if not more performant than ours. Furthermore, the version under test is the Go package – the Rust crate seems about 1.5-2x faster in local unit test benchmarks, so we’re also hopeful that those benchmarks will prove even more exciting.

The first two graphs are from the nytimes-256-angular dataset; with K=10 and 256 dimensions – the largest dimensionality in these benchmarks with 290K vectors stored. BBQvec-Go is always in bright red.

We also measured glove-100-angular – the largest number of vectors available on the ANN-Benchmarks main graphs, with 1.2M vectors and 100 dimensions (K=10)

Conclusion

We’d love to see what you build with BBQvec, and we’d love to see you at our future events and hackathons, to talk about how we’re innovating on AI search and retrieval. Subscribe to this blog, find us on Github, follow us on LinkedIn or Twitter/X, or join our WhatsApp Group.